Creating AnchorPoint: Vibecoding From Zero to Production (Part 3)

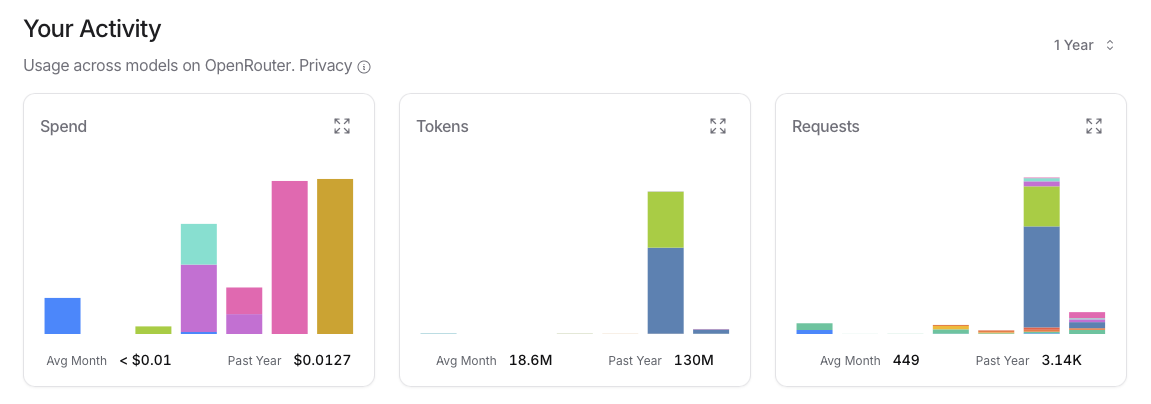

I hired a developer for $0.000003 an hour. Not a joke—that’s how AnchorPoint was built. I came at it like a project manager, not a coder. My “dev skills” peaked with some law school HTML/CSS hacks for a campaign site. JavaScript? That’s for people who debug without breaking a sweat. I did plenty of sweating.

But here’s the fun part: what if I treated AI like a junior engineer on my team? Prompt it with clear specs, iterate on feedback, and steer it through sprints? Could a non-coder like me actually ship a functional MVP?

Spoiler: Yes. And it was equal parts exhilarating, frustrating, and eye-opening. This post is the behind-the-scenes of that experiment—how I turned whiteboard scribbles into a live app using Cursor and Roo Code as my “dev team.” No prior experience required. Just stubbornness, strategy, and a willingness to let AI do the heavy lifting.

Backstory: From Whiteboard to Prompts

It started in our cramped living room in Valdosta, Georgia, right after our PCS to Moody. Anna was buried in patient notes, I was juggling freelance marketing gigs, and our PCS frustrations were still fresh. That 15-minute commute to nowhere? The sparse info on local bars and restaurants? None of it was going away. So I grabbed a whiteboard (the kind I used to track project statuses and deadlines on) and mapped out what AnchorPoint needed to be.

No tech jargon at first. Just plain English: “A simple search bar where a military spouse types ‘best neighborhoods near JB Lewis-McChord’ and gets clear, empathetic summaries—commute times, family vibes, hidden gems, all pulled from real sources like Reddit and base guides.”

From there, the magic (and the madness) of prompt engineering kicked in. Writing prompts isn’t like barking orders at a human dev—it’s more like drafting a legal brief. You have to be precise, anticipate ambiguities, and build in guardrails. My background in law and product management helped here; I’d spent years drafting contracts and specs that could stand up to some sort of scrutiny… allegedly.

That’s where my tool stack came together. I chose Cursor because it’s like VS Code on steroids—an IDE that integrates AI seamlessly, letting you chat with your code in natural language. It’s perfect for someone like me: “Hey, build a React component for a query bar,” and it generates, explains, and even refactors on the fly.

Pairing it with Roo Code was the game-changer. Roo Code is an AI coding agent that runs as an editor extension, and I used it as a structured prompting system (think of it as a recipe book for AI coding tasks) for iterative builds. It breaks complex projects into modular steps: explore the code, plan the changes, code incrementally, commit with docs. Why these two? Cursor handles the fluid, conversational flow (like pair-programming with a chatty colleague), while Roo keeps things disciplined, preventing me from wandering into the “cool feature” rabbit holes I described in my previous piece. Together, they felt like a well-oiled dev duo: one creative, one methodical. I loved this. It reminded me of my time at Rock Paper Reality, balancing developers against creative scopes, marketing needs, and customer requirements. I was in the zone.

OpenRouter and LibreChat rounded out the backend—routing prompts to the right models (like Claude or GPT variants) for specialized tasks, ensuring I wasn’t locked into one AI’s quirks. Setup took an afternoon: install Cursor, configure Roo templates, point the systems at OpenRouter models that fit their specific requirements. Boom. AI dev team assembled.

The Build: Collaborating with AI-as-Engineer

Treating AI like a junior engineer wasn’t just a metaphor—it shaped my entire workflow. I’d “assign tasks” via prompts, review the output, and iterate. No micromanaging water cooler chats; just code diffs and refinements.

Let’s walk through a real iteration, because theory is boring and practice is where the stories live.

Version 1: The Rough Draft

I started with the core: the query bar. Prompt to Cursor/Roo: “Using React, Vite, and Tailwind from DESIGN_SYSTEM.md, build a simple search component. It should accept input, submit to a Perplexica API via a custom hook, and display results in a card layout with loading spinner. No auth, and keep it stateless.”

What worked: The frontend spun up beautifully. A clean input field, styled per my design with sans-serif fonts, subtle blues evoking trust and calm (navy for headers, soft teal for accents, inspired by military branch colors but softer for spouses). The usePerplexicaQuery hook encapsulated the API call perfectly, pulling env vars without hardcoding secrets.

What broke: Results rendering. The AI generated a basic list, but it was raw JSON vomit—ugly, unparsed, and overwhelming. No empathy in the output; it just dumped text blocks. And error handling? Forget it. One bad API response, and the whole app tanked with a white screen.
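That white screen is the kind of failure a small state model can prevent. What follows is a minimal sketch, not the code AnchorPoint shipped: the names `QueryState`, `QueryEvent`, `queryReducer`, and `statusMessage` are hypothetical, and the idea is simply that every outcome of a request, including a bad API response, maps to something the page can render.

```typescript
// Hypothetical names throughout; none of this is taken from the AnchorPoint codebase.

type QueryState =
  | { status: "idle" }
  | { status: "loading" }
  | { status: "success"; text: string }
  | { status: "error"; message: string };

type QueryEvent =
  | { type: "submit" }
  | { type: "resolved"; text: string }
  | { type: "rejected"; reason: unknown };

// Pure transition function: each event produces a renderable state,
// so a failed request becomes an "error" state instead of an uncaught throw.
function queryReducer(state: QueryState, event: QueryEvent): QueryState {
  switch (event.type) {
    case "submit":
      return { status: "loading" };
    case "resolved":
      // Ignore late responses when nothing is in flight.
      if (state.status !== "loading") return state;
      return { status: "success", text: event.text };
    case "rejected": {
      if (state.status !== "loading") return state;
      const message =
        event.reason instanceof Error ? event.reason.message : "Something went wrong.";
      return { status: "error", message };
    }
  }
}

// What the UI would show for each state (plain text here to keep the sketch small).
function statusMessage(state: QueryState): string {
  switch (state.status) {
    case "idle":
      return "Type a question to get started.";
    case "loading":
      return "Searching...";
    case "success":
      return state.text;
    case "error":
      return `We hit a snag: ${state.message}`;
  }
}
```

In a React hook, a reducer like this could sit behind `useReducer`, with the component rendering a different view for each `status` rather than assuming the response is always good.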

Enter the debugging loop. I’d describe the issue in plain speak: “The results are coming back as a blob of text. Parse it into sections: Housing, Commute, Community. Add friendly headers like ‘What to Expect on the Drive In’ and bullet points. If no results, show ‘We couldn’t find specifics yet—try rephrasing?’”

Roo Code really flexed on this, structuring the fix into steps:

- Explore: Analyze current code.

- Plan: Refactor the response parser.

- Code: Update the component with conditional rendering.

- Commit: “Fixed results parsing for better UX.”
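For readers who want to see what that fix might look like, here is a rough sketch of the parsing step described in the prompt. It rests on a big assumption: that the raw answer labels each topic with a line such as `Housing:`. The real response format isn't shown in this post, and `parseSections`, `renderSummary`, and two of the three friendly headers are made up for illustration.

```typescript
// Assumption: topics arrive as labeled lines ("Housing:", "Commute:", "Community:").
// All names here are hypothetical, not AnchorPoint's actual code.

type SectionKey = "Housing" | "Commute" | "Community";

interface Section {
  header: string;    // friendly header shown to the reader
  bullets: string[]; // one bullet per non-empty line under the label
}

// Only the Commute header comes from the prompt above; the other two are placeholders.
const FRIENDLY_HEADERS: Record<SectionKey, string> = {
  Housing: "Where You Might Live",
  Commute: "What to Expect on the Drive In",
  Community: "Who You'll Meet",
};

// Fallback text, adapted from the prompt above.
const EMPTY_MESSAGE = "We couldn't find specifics yet. Try rephrasing?";

function parseSections(raw: string): Section[] {
  const keys = Object.keys(FRIENDLY_HEADERS) as SectionKey[];
  const found = new Map<SectionKey, string[]>();
  let current: SectionKey | null = null;

  for (const line of raw.split("\n")) {
    const trimmed = line.trim();
    if (trimmed === "") continue;

    // A label line looks like "Commute:" with optional text after the colon.
    const label = keys.find((k) => trimmed.toLowerCase().startsWith(k.toLowerCase() + ":"));
    if (label) {
      current = label;
      if (!found.has(label)) found.set(label, []);
      const rest = trimmed.slice(label.length + 1).trim();
      if (rest !== "") found.get(label)!.push(rest);
    } else if (current) {
      // Strip any leading bullet character the model added.
      found.get(current)!.push(trimmed.replace(/^[-*•]\s*/, ""));
    }
    // Lines before the first label are dropped rather than dumped on the page.
  }

  return keys
    .filter((k) => (found.get(k) || []).length > 0)
    .map((k) => ({ header: FRIENDLY_HEADERS[k], bullets: found.get(k)! }));
}

function renderSummary(raw: string): string {
  const sections = parseSections(raw);
  if (sections.length === 0) return EMPTY_MESSAGE;
  return sections
    .map((s) => [s.header, ...s.bullets.map((b) => `- ${b}`)].join("\n"))
    .join("\n\n");
}
```

The design choice worth noticing is the fallback: anything the parser can't place produces the friendly message instead of raw text, which is the conditional rendering the Code step refers to.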

The insights came fast and furious. This felt less like brute-force coding and more like collaboration. I’d nudge, the AI would adjust, and suddenly the app started “feeling” right. Empathetic, like a conversation with a knowledgeable base newcomer. Tweaking the model choice is where the real gains came from, and I’m still playing with it now. Instruct models are great at following… instructions, but they aren’t as sweet and compassionate. Friendly chat-focused models love to help out, so they’ll just make shit up. Did you know there was a school, a restaurant, and a job center for your spouse LITERALLY right next door to where you were moving? If you had asked v0.2, it would’ve sworn your new apartment complex had a daycare right inside it. I wouldn’t bank on it.

Then, the requirements drift. In one early build, I asked for “community insights,” and the AI interpreted it as pulling random Twitter feeds. Not helpful for a military spouse needing vetted, base-specific advice. I had to reel it in: “Focus on military forums, Reddit r/military, and OneSource—filter for recency and relevance.” Prompt refinement became my superpower.
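That refined prompt boils down to a filter, and it can help to see it as one. This is a sketch under assumptions: the `SourceHit` shape, the source labels, and the cutoffs (two years, a 0.5 relevance score) are invented for illustration and are not how AnchorPoint actually scores results.

```typescript
// Hypothetical shapes and thresholds; nothing here is AnchorPoint's real pipeline.

interface SourceHit {
  source: string;      // e.g. "reddit:r/military"
  publishedAt: string; // ISO 8601 date
  score: number;       // relevance from the search layer, assumed to be 0 to 1
  snippet: string;
}

// Allowlist mirroring the prompt: military forums, r/military, OneSource.
const ALLOWED_SOURCES = new Set(["reddit:r/military", "militaryonesource", "military-forum"]);

const DAY_MS = 24 * 60 * 60 * 1000;

function filterHits(
  hits: SourceHit[],
  now: Date,
  maxAgeDays: number = 730,
  minScore: number = 0.5
): SourceHit[] {
  const cutoff = now.getTime() - maxAgeDays * DAY_MS;
  return hits
    .filter((h) => ALLOWED_SOURCES.has(h.source))                // vetted sources only
    .filter((h) => new Date(h.publishedAt).getTime() >= cutoff)  // recency
    .filter((h) => h.score >= minScore)                          // relevance
    .sort((a, b) => b.score - a.score);                          // best first
}
```

Passing `now` in as a parameter, rather than reading the clock inside the function, keeps the filter predictable when you test it.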

Failures, Misfires, and Honest Iteration

Look, this wasn’t all smooth sailing. If I made it sound like AI is a magic wand, I’d be lying. Building AnchorPoint had its share of facepalms, late-night curses, and “why did I think this was a good idea?” moments.

One epic misfire: I prompted for mobile responsiveness, but the AI built a fixed-width layout that broke on my phone and my tablet and then, somehow, on my computer too, where it had worked fine before. (Pro tip: Always test on actual devices, not just the emulator.) Another time, integrating the Perplexica API led to CORS errors: cross-origin headaches that had me Googling basics I hadn’t touched since my days at RPR, when my highlight project was rolling out a CDN for our heavy 3D assets for AR/XR content. The AI suggested fixes, but I had to iterate three times before it clicked: add an “on-site” proxy layer to handle requests server-side.
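The proxy idea fits in a few lines. This is an illustration under assumptions, not AnchorPoint's server code: `UPSTREAM_URL`, `Fetcher`, and `handleSearch` are hypothetical, and the upstream path and payload shape are placeholders rather than Perplexica's documented API. The point is that the browser only ever talks to the app's own origin, and the server makes the cross-origin call.

```typescript
// Hypothetical names and endpoint; the payload shape is an assumption.

interface UpstreamResponse {
  ok: boolean;
  status: number;
  text(): Promise<string>;
}

// Injected so the handler can be exercised without a network.
type Fetcher = (
  url: string,
  init: { method: string; headers: Record<string, string>; body: string }
) => Promise<UpstreamResponse>;

interface ProxyResult {
  status: number;
  body: string;
}

const UPSTREAM_URL = "http://localhost:3001/search"; // placeholder address

// Runs on the app's own server behind a same-origin route (say, /api/search).
// The page never makes a cross-origin request, so the browser has nothing to block.
async function handleSearch(query: string, fetcher: Fetcher): Promise<ProxyResult> {
  if (query.trim() === "") {
    return { status: 400, body: JSON.stringify({ error: "Query is required." }) };
  }
  try {
    const upstream = await fetcher(UPSTREAM_URL, {
      method: "POST",
      headers: { "Content-Type": "application/json" },
      body: JSON.stringify({ query }),
    });
    if (!upstream.ok) {
      return {
        status: 502,
        body: JSON.stringify({ error: `Upstream returned ${upstream.status}.` }),
      };
    }
    return { status: 200, body: await upstream.text() };
  } catch {
    // Network failure: answer with a clean error instead of letting the request hang.
    return { status: 502, body: JSON.stringify({ error: "Upstream unreachable." }) };
  }
}
```

In a real server you would wire `handleSearch` into whatever framework hosts the route and pass in the platform's `fetch`. Keeping the fetcher injectable also means the error paths can be checked with a fake.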

Misunderstood prompts were the real villains. “Style the results like a blog post” turned into overly verbose, flowery prose—think Shakespeare meets Zillow. Not the concise, actionable summaries military families need during PCS chaos. I stepped in as PM: “Redirect—keep it bullet-point practical, 200 words max, tone reassuring but direct.”

What made it authentic? Documenting the flops. I kept a running log in a Notion page (call it my “AI Post-Mortem Journal”): “Prompt X led to Y bug; fixed by adding Z constraint.” Each failure was an agile sprint retro in miniature—reflect, re-prompt, rebuild. And humor helped: After one particularly stubborn Tailwind glitch, I joked in my notes, “Qwen Code is like that eager intern who codes fast but forgets to document and refuses to change anything after a sprint. Let’s try again.”

These iterations weren’t setbacks; they were progress. By failure five or six, I was faster at spotting drift, and the app evolved from a clunky prototype to something polished.

Lessons Learned: PMing Your AI Dev Team

Steering AI through a build taught me more about project management than any of my old SaaS roles, oddly enough. Here’s the distilled wisdom:

- Prompting is requirements refinement on steroids. Vague specs lead to vague code. I learned to layer prompts: Start broad (“Build the search UI”), then drill down (“Use these exact Tailwind classes: bg-teal-100 for cards”). We all like to pretend a team can be handed the full project scope and turn it into a finished product, but I learned ages ago that human devs can’t. Why did I think an AI dev could?

- Debugging feels like pair programming. Instead of staring at errors alone, I’d chat: “Why is this hook re-rendering infinitely?” The AI would explain (often with code comments), and we’d fix it together. It’s collaborative, not combative. Lean into the QA process the way you would with a proper QA team. You’d have them present the whole why/when/how of a bug, so why did I expect an AI model to fix a problem with just “ew it’s all blank now, what did you do?”

- AI’s superpower is elasticity. It never complains about scope changes, overtime, or “one more tweak.” Want to pivot from JSON parsing to markdown rendering? Done in minutes. But that speed comes with a catch: You still need human judgment to keep things aligned. Without my PM lens, prioritizing empathy over features, we’d have ended up with a flashy but useless tool.

In short though, AI didn’t make me a developer. It amplified my strengths as a strategist and integrator, letting me focus on the why (solving PCS pain) while it handled the how (the code).

What This Means for Development Workflows

Here’s the bigger picture: AI won’t replace engineers anytime soon. I’ve worked with seriously talented professional engineers for years, and they bring creativity, context, and that irreplaceable human spark. But for PMs, marketers, or anyone with a product idea and no dev budget? Tools like Cursor and Roo Code are a force multiplier. You don’t need to be a JS guru to ship; you need to guide AI like you guide people: clear specs, honest feedback, relentless iteration.

For military spouses with a few skills in their back pocket like me, this leveled the playing field. If I can build AnchorPoint—a scrappy, human-first tool to ease PCS stress—imagine what you could create for your own challenges. It’s empowering, democratizing, and honestly, a little addictive.

We’re live now, and already getting queries from families at bases like Fort Liberty and NAS Pensacola, literally 2 hours from our terrible base at Moody in Georgia. The analytics don’t lie, and it brings me SO much joy. It works because it’s built with real frustration in mind, iterated with AI’s help, and launched with a spouse’s heart. My wife is a goddess, and I will follow her forever, but thank god I found a way to make my skills translate into this weird, new world.

Next up: Part 4, where I dive into deployments, debug logs, and getting this thing from my laptop to the cloud—without a full IT team.

Stay building,

—Chris

AnchorPoint is free and growing—try a search at anchorpoint.my and let me know how it helps (or doesn’t).

Posts in this series

- Creating AnchorPoint: Vibecoding From Zero to Production (Part 3)

- Developing AnchorPoint: From Frustration to Blueprint (Part 2)

- Developing AnchorPoint: Our Military Family Story (Part 1)